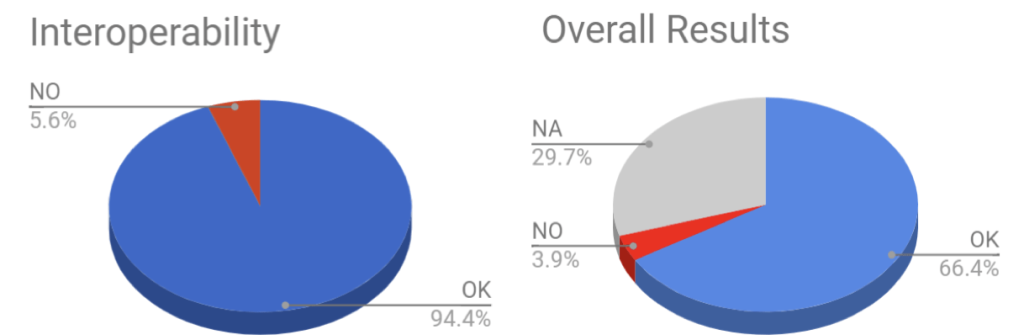

Some 95 percent of the 189 interoperability test sessions were successful, reports Gianpietro Lavado, a network solutions architect at Whitestack.

In the first post of this series, I shared a summary about the week I spent in France as part of the European Telecommunications Standards Institute’s (ETSI) second edition of NFV Plugtests.

Now that the official results are out, let’s dive in!

A quick summary: 95 percent of the 189 interoperability test sessions succeeded. (A ‘session’ means a combination of VNF, VIM+NFVI and MANO solutions from different vendors.) Overall, only about 30 percent of the features were not yet supported by one of the involved participants, compared to 61 percent at the first Plugtests. This speaks very well to NFV readiness, considering that the feature set grew by more than 50 percent for this second edition, adding multi-vendor network services, fault and performance management, enhanced platform awareness and multi-site deployments.

Note: all graphics and tables are as published in the second NFV Plugtests report.

Here, I’ll focus on the results related to the NFVI and the virtualized infrastructure manager (VIM, a.k.a. telco cloud). As you may already know, these are the physical compute, storage and network resources (NFVI) and their manager (VIM), which together provide a cloud environment for virtualized network functions.

Twelve different NFVI+VIM combinations were tested, including 11 with different OpenStack distributions (from seven VIM vendors) and one with VMware’s vCloud Director.

Since NFVI is expected to be based on off-the-shelf generic servers with homogeneous features, most challenges were presented to the VIM software. The following tables show some examples of the kind of tests that were included. There were basic tests like these, requesting basic lifecycle management:

… and more advanced ones, like these, requesting autoscaling based on VIM metrics:

As you can see, the test descriptions cover the NFV system as a whole. But how do these tests relate to the specific features that VIM platforms had to support?

VIMs were expected to support:

- Standard APIs: to provide a way for MANO platforms to connect and orchestrate VIM functions.

- Standard hypervisor features: to be ready to support different VM image formats that VNFs may require.

- Complete VM lifecycle: to be able to start, pause, stop and destroy VMs, along with their compute, networking and storage resources.

- Networking: to provide standard networking features for VMs to interconnect in a number of ways: private and public networks, NAT, security features, etc.

- Performance management: to collect and store performance metrics for all VMs, while being able to set thresholds and alarms for any of them, so that MANO platforms can monitor KPIs and trigger actions.

- Fault Management: to generate events and alarms and be able to propagate them to higher layers.

- Enhanced Platform Awareness (EPA): to be able to set EPA-related configurations on NFVI resources (SR-IOV, CPU pinning, NUMA topology awareness, Huge pages, etc.)
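To make the EPA item more concrete, here is a minimal sketch of how such settings are commonly requested on an OpenStack-based VIM, through Nova flavor extra specs. It is not taken from the Plugtests report and does not describe how any participant configured their platform. The helper names and the flavor name `vnf.epa.medium` are hypothetical; the `hw:` keys and the `openstack flavor set --property` command are standard OpenStack. SR-IOV and OVS-DPDK also need host and network configuration that is not shown here.

```python
# Sketch only: maps a few EPA features to Nova flavor extra specs.
# Helper names and the flavor name are illustrative assumptions.

def epa_extra_specs(cpu_pinning=False, huge_pages=False, numa_nodes=None):
    """Return the flavor extra specs that request the given EPA features."""
    specs = {}
    if cpu_pinning:
        # Pin each guest vCPU to a dedicated host CPU.
        specs["hw:cpu_policy"] = "dedicated"
    if huge_pages:
        # Back guest memory with huge pages.
        specs["hw:mem_page_size"] = "large"
    if numa_nodes is not None:
        # Number of guest NUMA nodes to expose.
        specs["hw:numa_nodes"] = str(numa_nodes)
    return specs


def flavor_set_command(flavor, specs):
    """Build the CLI arguments that would apply the specs to a flavor."""
    args = ["openstack", "flavor", "set"]
    for key, value in sorted(specs.items()):
        args += ["--property", f"{key}={value}"]
    args.append(flavor)
    return args


specs = epa_extra_specs(cpu_pinning=True, huge_pages=True, numa_nodes=1)
print(" ".join(flavor_set_command("vnf.epa.medium", specs)))
```

The script only prints the command rather than running it, so it can be tried without access to a cloud. A MANO platform would typically derive the same kind of settings from the VNF descriptor instead of a hand-written call.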

Cloud platforms are now mature enough that most of these features are fully supported by both the OpenStack distributions and VMware vCD. Perhaps the only one that still presents challenges at the VIM layer is EPA management, a recent concept that emerged as NFV evolved to optimize NFVI performance using techniques that should work on generic servers.

During these Plugtests, EPA testing was part of the optional set of sessions and the actual feature to test was not specified. Participants could therefore test simple control-plane related features like Huge Pages or CPU Pinning, or more complex, data-plane related features like SR-IOV or OVS-DPDK.

As evidenced in the following table, for Multi-VNF EPA there were only eight sessions, which included 26 tests. Only 12 could actually be run, while 14 asked for features that were not supported by participants. Of those 12 tests, nine succeeded and three exposed interoperability issues. In short, only about a third of the planned EPA tests (nine of 26) were successful, although three quarters of the tests that could be run did pass.
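The arithmetic behind that summary is easy to check. The short sketch below uses only the counts quoted above; the variable names are mine, not the report’s.

```python
# Multi-VNF EPA counts as quoted in the text above.
total_tests = 26
unsupported = 14   # features not supported by participants
succeeded = 9
failed = 3         # tests that exposed interoperability issues

executed = succeeded + failed
# Sanity check: executed tests plus unsupported tests cover all planned tests.
assert executed == total_tests - unsupported

success_of_planned = succeeded / total_tests
success_of_executed = succeeded / executed
unsupported_share = unsupported / total_tests

print(f"Succeeded, of all planned tests: {success_of_planned:.0%}")   # 35%
print(f"Succeeded, of executed tests:    {success_of_executed:.0%}")  # 75%
print(f"Not supported:                   {unsupported_share:.0%}")    # 54%
```

The two success rates answer different questions: the first reflects how much of the EPA scope was demonstrably working, the second how well implementations interoperated once the feature was supported at all.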

We participated with Whitestack’s VIM solution, WhiteCloud, a multi-site telco cloud environment based on OpenStack Pike over generic servers and switches. I’m happy to report that it passed most tests successfully when integrating with different MANO solutions and VNFs. We didn’t focus our testing efforts on data-plane related EPA features this time, but we expect to disclose full (and successful) results in the third edition of these tests, expected to take place in May 2018.

In conclusion, because most features and interoperability worked fine for participants, we can say that at the NFVI/VIM level, NFV is ready for prime time! Of course, new features will keep being developed, by standards bodies and by vendors at different speeds, which makes continuing this event all the more important.

For production environments, additional attention must be paid when evaluating telco cloud solutions that require high throughput from servers. Data-plane acceleration features of the NFVI and VIM must be carefully assessed and tested, including the most popular alternatives: OVS-DPDK and SR-IOV.

Stay tuned for the final post in this series, where I’ll share the results from the MANO perspective. Thanks for reading!