The direction should be set by open-source projects like Open Source MANO and ONAP for a future of “horizontal” deployments, says Whitestack’s Gianpietro Lavado.

Over the years, the term network functions virtualization may have deviated a little bit from its original definition. This post tries to get back to basics and to start a discussion around the possible deviations.

Let’s start by going back to 2012 and the question: What is NFV trying to achieve?

If you read the original white paper, you’ll see that it was written to address common concerns of the telco industry, brought on by the increasing variety of proprietary hardware appliances, including:

- Capital expenditure challenges

- Space and power to accommodate boxes

- Scarcity of skills necessary to design, integrate and operate them

- Procure-design-integrate-deploy cycles repeated with little or no revenue benefit due to accelerated end-of-life

- Hardware life cycles becoming shorter

You’ll also see that NFV was defined to address these problems by “…leveraging standard IT virtualization technology to consolidate many network equipment types onto industry standard high-volume servers, switches and storage, which could be located in data centers, network nodes and in the end-user premises…”

In other words, the goal was to implement our network functions and services in a virtualized way, using commodity hardware. But is this really happening?

Almost six years later, we’re still at the early stages of NFV, even though the technology is ready and multiple carriers are experimenting with different vendors and integrators in pursuit of the NFV promise. So, is the vision becoming real?

Some initiatives are clearly following that vision, such as AT&T’s Flexware and Telefonica’s UNICA projects, where the operators are deploying vendor-agnostic NFVI and VIM solutions as the foundation for their VNFs and network services.

However, most NFV implementations around the world are still led not by the operators but by the same vendors and integrators who were part of the original problem (the “increasing variety of proprietary hardware appliances”). This is not inherently bad, because they’re all evolving, but the risk is that most of them still depend on a strong business built on proprietary boxes.

The result is “vertical” NFV deployments: full NFV stacks, from servers to VNFs, all provided, supported (and understood) by a single vendor. Some carriers even deploy the whole NFV stack multiple times, sometimes once per VNF (!), so we’re back to appliances with a VNF tag on them.

We could accept this as part of a natural evolution, in which operators feel more comfortable working with a trusted vendor or integrator while they get familiar with the new technologies as a first step. However, this approach might be distorting the market, making NFV architectures look more expensive than traditional legacy solutions when, in fact, people expect the opposite.

But in the future, is this the kind of NFV that vendors and integrators really want to recommend to their customers? Is this the kind of NFV deployment operators should accept in their networks, with duplicated components and disparate technologies all over the place?

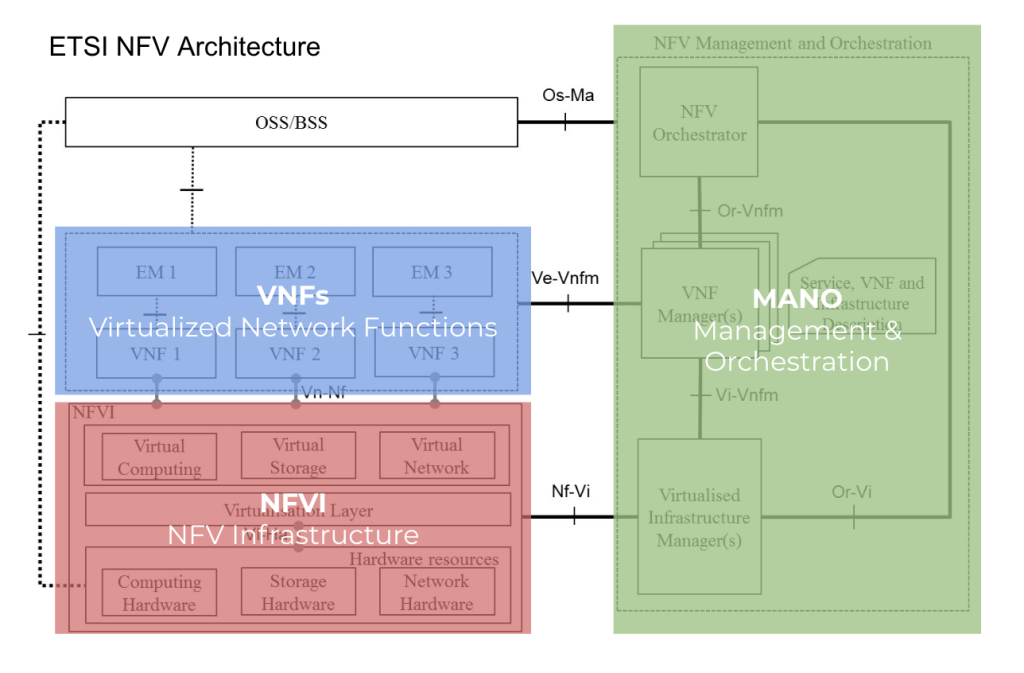

To comply with the NFV vision, operators should lead their NFV deployments and shift gradually from the big appliances world (in all of its forms, including big NFV full stacks) towards “horizontal” NFV deployments, where a common telco cloud (NFVI/VIM) and management (MANO) infrastructure is shared by all the VNFs, with operators having complete control and knowledge of that new telco infrastructure.
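To make the contrast concrete, here is a minimal sketch that counts how many infrastructure and management stacks an operator ends up running in each model. The `Deployment` class, the `count_stacks` helper and the VNF and stack names are all hypothetical, chosen only for illustration; they do not come from any NFV specification or product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    vnf: str
    nfvi_vim: str  # hypothetical label for the NFVI/VIM stack hosting the VNF
    mano: str      # hypothetical label for the MANO stack managing the VNF


def count_stacks(deployments):
    """Return (distinct NFVI/VIM stacks, distinct MANO stacks)."""
    return (
        len({d.nfvi_vim for d in deployments}),
        len({d.mano for d in deployments}),
    )


# Example VNF names, assumed for illustration only.
vnfs = ["vEPC", "vIMS", "vFirewall"]

# "Vertical": every VNF arrives with its own vendor-supplied full stack.
vertical = [Deployment(v, f"{v}-vendor-cloud", f"{v}-vendor-mano") for v in vnfs]

# "Horizontal": all VNFs share one telco cloud and one MANO stack.
horizontal = [Deployment(v, "shared-telco-cloud", "shared-mano") for v in vnfs]

print(count_stacks(vertical))    # (3, 3)
print(count_stacks(horizontal))  # (1, 1)
```

In the vertical case, the number of stacks to buy, operate and understand grows with every new VNF; in the horizontal case it stays constant.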

Most of the industry believes in that vision; it is technically possible and even more cost-effective. So what is working against it?

I think we need to admit that the same vendors and integrators will take time to evolve their business models to match this vision. In the meantime, many operators have started to realize that they need to invest in understanding new technologies and in creating new roles to take ownership of their infrastructure. They are even opening the door to a new type of integrator: those born with the vision of software-defined, virtualized network functions, with commodity hardware and open-source technologies in their DNA.

Three key elements are not only recommended, but necessary for any horizontal NFV deployment to be feasible:

- Embrace the NFV concept, with both technology and business outcomes, ensuring the move away from appliances and/or full single-vendor proprietary stacks towards a commodity infrastructure.

- Get complete control of that single infrastructure and its management and orchestration stack, which should provide life cycle management for all the network services at different abstraction levels.

- Maximize the use of components based on open-source technologies, as a mechanism to (1) accelerate innovation by using solutions built by and for the whole industry, and (2) decrease dependency on a small set of vendors and their proprietary architectures.

Regarding the last point, OpenStack is playing a key role by providing software, validated by the whole industry, for managing a telco cloud as an NFV VIM, while other open-source projects like Open Source MANO and ONAP are starting to provide the management software needed at higher layers of abstraction to control the life cycle of virtualized network services.

In particular, at Whitestack we selected the OpenStack + Open Source MANO combination for building a solution for NFV MANO. If you want to explore why, check the following presentation at the OpenStack Summit 2018 (Vancouver) for details: Achieving end-to-end NFV with OpenStack and Open Source MANO.